Is It Time to Stop Chatting with Your AI Assistant?

Explorations on generative AI

Woman having a conversation with a droid-style robot in a cafe (image generated with Stable Diffusion 1.5)

In my 15-year career as a software engineer, I've seen my share of iterations of AI technologies and their user interfaces. In 2008, I started Astrid, a personal to-do list app, giving it a human name and a squid mascot to make the app feel like a personal assistant. Later, I spent a few years at Yahoo working on AI-driven conversational user assistance. Over the past six months, like many of you, I've been experimenting with building products using Large Language Models (LLMs). In particular, I've been exploring ways users can interact and co-create with these tools.

Today, I want to talk about the practice of naming and anthropomorphizing AI, a timely topic given recent advances in AI technologies like ChatGPT.

Most of us have encountered AI avatars at some point (hi, Clippy). I believe this metaphor was originally used to make users feel more comfortable with computers and software.

With the advent of LLM-based tools like ChatGPT, AI has become increasingly capable of responding like a human. However, this development has also highlighted the problems that arise from over-anthropomorphizing AI.

Uncanny Valley effect

The uncanny valley effect is the phenomenon where something that appears almost, but not quite, human elicits feelings of unease or revulsion. As AI becomes more human-like, users may start to treat it like a human, which leads to confusion and discomfort when the AI falls short of human expectations. This can break the user's "interaction model" and lead to frustration and distrust.

Lack of understanding of capabilities

Moving the entire experience of a product to the backend, as a chat interface does, also leaves users without a clear understanding of the AI's capabilities. Does this AI search the internet? Does it have access to my files or calendar? Can it read all my code, or just some of it? Developers and product people will love being able to ship features without building them into (and cluttering up) the UI, but users find it difficult to learn how an AI chat interface works without experimentation, and impossible to know when and how the AI changes over time.

Blank box problem

A related problem is discovery: one of the major criticisms of chat as an interface is the difficulty users experience when faced with a blank text box. There are no visual affordances to help users engage with the product, which leads to choice paralysis and forces users to put their intent into words just to find out whether the system can carry it out. Conversation shortcut buttons and other UI elements can mitigate this, but you can end up in a "worst of both worlds" situation where there are both buttons and chat, leading users to assume that the AI can only handle the use cases the buttons present.

Chat is inefficient

While natural language interactions can be helpful, they're not always the most efficient way to communicate. Humans are naturally drawn to visual elements and stories, which chat-based AI often lacks. It's also hard to become an expert at navigating a chat-driven system, since users often finish a conversation without discovering the "shortcut to the answer" they could have asked for directly. Even when the shortcut is mentioned explicitly (e.g. "next time you can ask for a pie chart of your sales data"), many users learn by doing, not by reading.

Chat is powerful

However, there are absolutely times when chat is the best medium, for example when users are hands-free or away from their computer (e.g. in a car or in bed). Another is iterative exploration: the history of a conversation makes it easy to backtrack and branch. With ChatGPT, I'll often go back and edit a previous message to explore a different conversation path. In these cases, it helps to treat chat as a software interaction pattern rather than as a conversation, which makes features like branching and revising history easier to understand.
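To make the "interaction pattern, not conversation" idea concrete, here is a minimal sketch of chat history stored as a tree rather than a flat list, so that editing an earlier message creates a new branch instead of overwriting the old one. The names `Turn`, `edit`, and `history` are hypothetical; this is not how ChatGPT or any particular product is implemented, just one way the branching behavior described above could be modeled.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Turn:
    """One message in a hypothetical conversation tree."""
    role: str  # e.g. "user" or "assistant"
    text: str
    # Links are excluded from repr/eq to avoid walking the whole tree recursively.
    parent: Optional[Turn] = field(default=None, repr=False, compare=False)
    children: list[Turn] = field(default_factory=list, repr=False, compare=False)

    def add_child(self, role: str, text: str) -> Turn:
        """Append a new turn below this one and return it."""
        child = Turn(role, text, parent=self)
        self.children.append(child)
        return child


def edit(turn: Turn, new_text: str) -> Turn:
    """'Edit' a past turn by adding a sibling branch; the original branch stays intact."""
    if turn.parent is None:
        raise ValueError("the root turn cannot be edited")
    return turn.parent.add_child(turn.role, new_text)


def history(turn: Turn) -> list[tuple[str, str]]:
    """Walk from a turn up to the root to rebuild the linear history of that branch."""
    path = []
    node = turn
    while node.parent is not None:  # stop at the root sentinel, which is never shown
        path.append((node.role, node.text))
        node = node.parent
    return list(reversed(path))


root = Turn("root", "")  # hypothetical sentinel node at the top of every conversation
question = root.add_child("user", "Summarize my sales data")
answer = question.add_child("assistant", "(summary as a table)")
branch = edit(question, "Show my sales data as a pie chart")

print(history(answer))  # [('user', 'Summarize my sales data'), ('assistant', '(summary as a table)')]
print(history(branch))  # [('user', 'Show my sales data as a pie chart')]
```

Because an edit adds a branch rather than rewriting history, the original thread stays reachable, which is what makes backtracking feel safe to the user.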

Takeaway

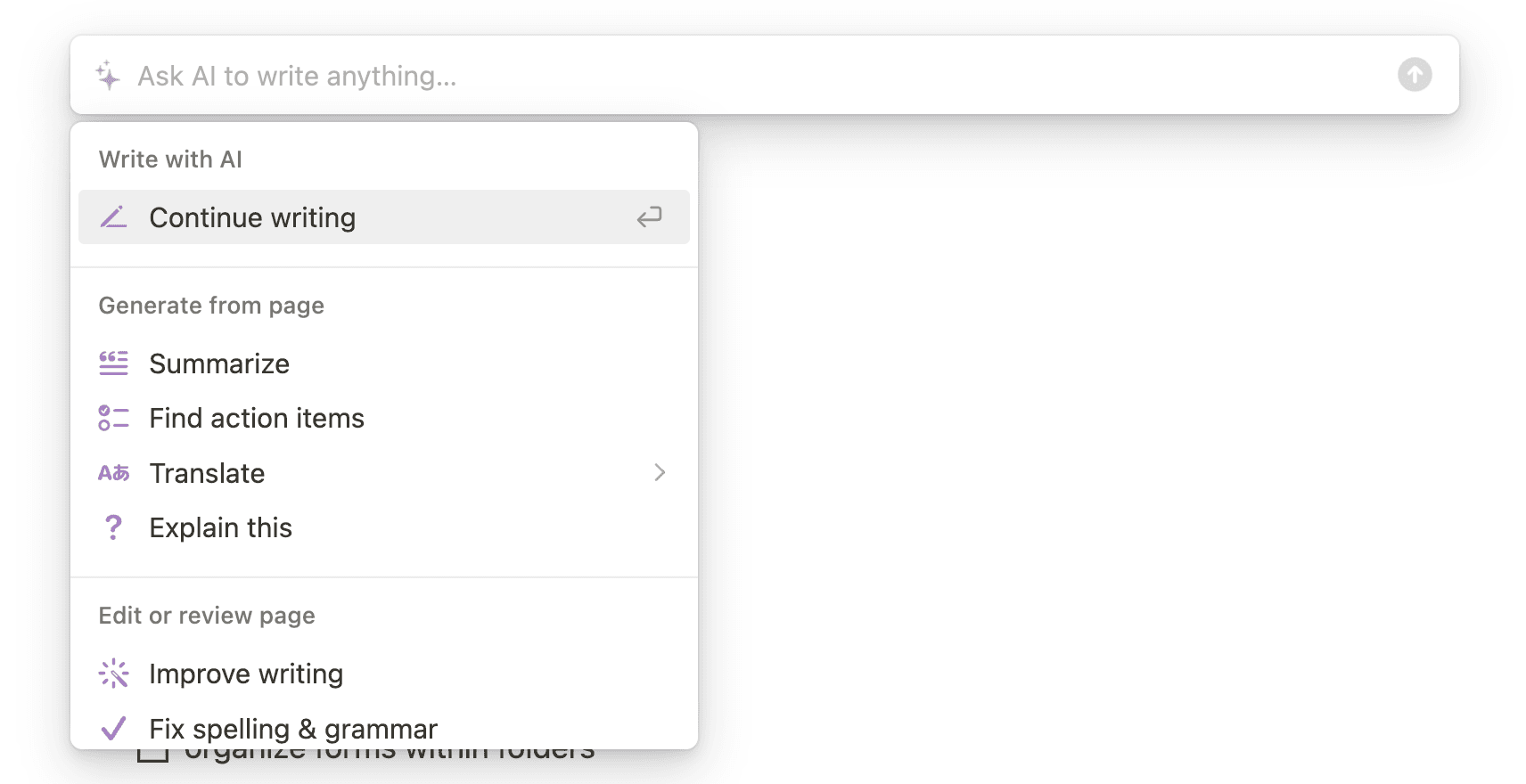

Products like Notion are experimenting with command palettes and other ways to interact with AI. This is a welcome direction: assistance doesn't have to take the form of a conversation.

That said, I think there's still a place for chat in AI tools, especially when the AI gets things wrong: users need to be able to correct its output quickly to minimize frustration.

Another key is adapting the modality to the user's needs: free text, audio conversation, interactive UI, or command palettes. This kind of flexible adaptation is a new strength of LLM-powered systems and should guide our product development strategies moving forward.

I'm especially looking forward to seeing what new products and experiences we can build when LLMs are combined with other multi-modal AI technologies in the future.